| e-SCIENCE @ IFIC - Valencia |



| ACTIVE PROJECTS |


| EGEE-III |

Enabling Grids for E-sciencE (EGEE) is Europe's leading grid computing project, providing a computing support infrastructure for over 10,000 researchers worldwide, in fields as diverse as high energy physics and the earth and life sciences. In 2009 EGEE is focused on transitioning to a sustainable operational model while maintaining reliable services for its users. The resources currently coordinated by EGEE will be managed through the European Grid Initiative (EGI) as of 2010. In EGI, each country's grid infrastructure will be run by its National Grid Initiative. The adoption of this model will enable the next leap forward in research infrastructures to support collaborative scientific discoveries. EGI will ensure abundant, high-quality computing support for the European and global research community for many years to come.

| EGI |

The main foundations of EGI are the National Grid Initiatives (NGIs), which operate the grid infrastructure in each country. EGI will link existing NGIs and will actively support the setup and initiation of new ones.

| Red de e-Ciencia |

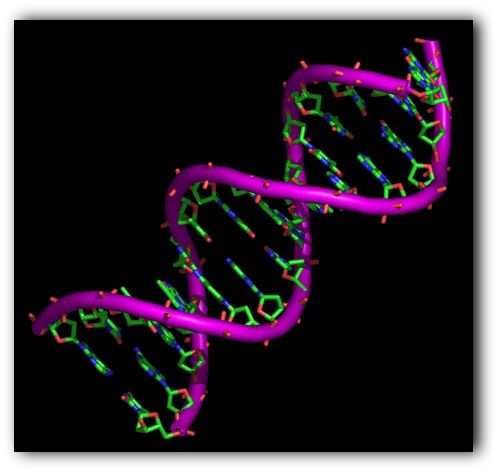

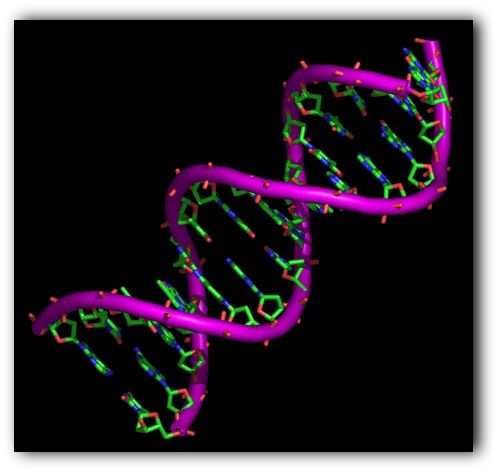

The Red Nacional de e-Ciencia (the Spanish national e-Science network) aims to coordinate and promote the development of scientific activity in Spain through the collaborative use of geographically distributed resources interconnected via the Internet. Its participants include users and application experts from a range of scientific disciplines (biocomputing, medical imaging, computational chemistry, fusion, meteorology, etc.), ICT researchers, and resource-provider centres, so that all the actors in e-Science are represented.

| ATLAS |

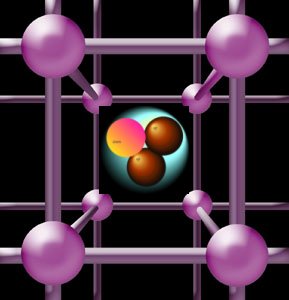

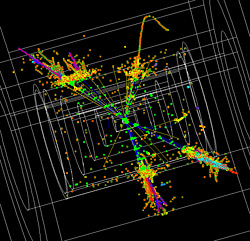

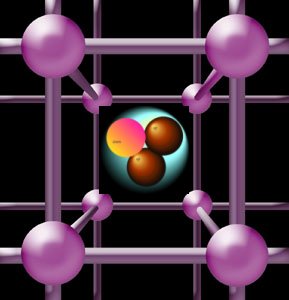

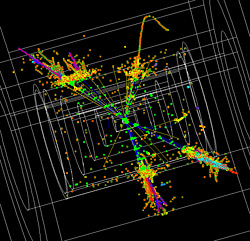

ATLAS is a particle physics experiment at the Large Hadron Collider (LHC) at CERN. Starting in late 2009/2010, the ATLAS detector will search for new discoveries in the head-on collisions of protons of extraordinarily high energy. ATLAS will learn about the basic forces that have shaped our Universe since the beginning of time and that will determine its fate. Among the possible unknowns are the origin of mass, extra dimensions of space, microscopic black holes, and evidence for dark matter candidates in the Universe. Computing for the ATLAS experiment will pose a number of new, as yet unsolved, technological and organisational challenges.

| PARTNER |

A Particle Training Network for European Radiotherapy (PARTNER) has been established in response to the critical need to reinforce research in ion therapy and to train professionals in the rapidly emerging field of hadron therapy. It is an interdisciplinary, multinational initiative whose primary goal is to train researchers who will help to improve the overall efficiency of ion therapy in cancer treatment and to promote clinical, biological and technical developments at a pan-European level, for the benefit of all European inhabitants.

| Metacentro |

Metacentro is a project that brings together actors from the Valencian Region with a common interest in grid technologies. The founding members are the University of Valencia, CSIC and the Polytechnic University of Valencia.

| ETC |